This is a guest post by Liam Newman, Technical Evangelist at CloudBees.

I’ve only been working with Pipeline for about a year. Pipeline in and of itself has been a huge improvement over old-style Jenkins projects. As a developer, it has been so great to be able to work with Jenkins Pipelines using the same tools I use for writing any other kind of code.

I’ve also found a number of tools that are super helpful specifically for developing Pipelines. Some were easy to find, like the built-in documentation and the Snippet Generator. Others were not as obvious or were only recently released. In this post, I’ll show how a few of those tools make working with Pipelines even better.

The Blue Ocean Pipeline Editor

The best way to start this list is with the most recent and coolest arrival in this space: the Blue Ocean Pipeline Editor. The editor only works with Declarative Pipelines, but it brings a sleek new user experience to writing Pipelines. My recent screencast, released as part of the Blue Ocean Launch, gives a good sense of how useful the editor is.

Command-line Pipeline Linter

One of the neat features of the Blue Ocean Pipeline Editor is that it does basic validation on our Declarative Pipelines before they are even committed or run. This feature is based on the Declarative Pipeline Linter, which can be accessed from the command line even if you don’t have Blue Ocean installed.

When I was working on the Declarative Pipeline: Publishing HTML Reports blog post, I was still learning the declarative syntax and I made a lot of mistakes. Getting quick feedback about whether my Pipeline was in a sane state made writing that blog post much easier. I wrote a simple shell script that would run my Jenkinsfile through the Declarative Pipeline Linter.

    # curl (REST API)

    # User
    JENKINS_USER=bitwisenote-jenkins1

    # API key from "/me/configure" on my Jenkins instance
    JENKINS_USER_KEY=--my secret, get your own--

    # URL for my local Jenkins instance.
    JENKINS_URL=http://$JENKINS_USER:$JENKINS_USER_KEY@localhost:32769

    # JENKINS_CRUMB is needed if your Jenkins master has CSRF protection enabled (which it should)
    JENKINS_CRUMB=`curl "$JENKINS_URL/crumbIssuer/api/xml?xpath=concat(//crumbRequestField,\":\",//crumb)"`

    curl -X POST -H "$JENKINS_CRUMB" -F "jenkinsfile=<Jenkinsfile" "$JENKINS_URL/pipeline-model-converter/validate"

Note on JENKINS_URL: This is not secure. I’m running this locally only. See Jenkins CLI for details on how to do this securely.
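As noted above, the Jenkins CLI is the more secure route. A rough sketch of what that could look like: the linter is also exposed as the `declarative-linter` CLI command, which can be reached over SSH. This assumes the Jenkins SSH server is enabled and your SSH public key is registered with your Jenkins user. The host name and port are placeholders, and `lint_over_ssh` and `DRY_RUN` are hypothetical names used only for illustration.

```shell
#!/bin/sh
# Sketch only: lint a Jenkinsfile through the Jenkins CLI over SSH.
# Assumes the Jenkins SSH server is enabled and your SSH public key is
# registered with your Jenkins user. Host and port are placeholders.
JENKINS_HOSTNAME=${JENKINS_HOSTNAME:-localhost}
JENKINS_SSHD_PORT=${JENKINS_SSHD_PORT:-2222}

# lint_over_ssh FILE  (hypothetical helper, not part of Jenkins)
# With DRY_RUN=1 the command is printed instead of executed.
lint_over_ssh() {
  file=${1:-Jenkinsfile}
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "ssh -p $JENKINS_SSHD_PORT $JENKINS_HOSTNAME declarative-linter < $file"
  else
    ssh -p "$JENKINS_SSHD_PORT" "$JENKINS_HOSTNAME" declarative-linter < "$file"
  fi
}
```

Calling `lint_over_ssh Jenkinsfile` sends the file to the linter without putting an API key in a URL or in the script; authentication is left to SSH.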

With this script, I was able to find the error in this Pipeline without having to take the time to run it in Jenkins. (Can you spot the mistake?)

    #!groovy

    pipeline {
      agent any

      options {
        // Only keep the 10 most recent builds
        buildDiscarder(logRotator(numToKeepStr:'10'))
      }
      stages {
        stage ('Install') {
          steps {
            // install required bundles
            sh 'bundle install'
          }
        }
        stage ('Build') {
          steps {
            // build
            sh 'bundle exec rake build'
          }

          post {
            success {
              // Archive the built artifacts
              archive includes: 'pkg/*.gem'
            }
          }
        }
        stage ('Test') {
          step {
            // run tests with coverage
            sh 'bundle exec rake spec'
          }

          post {
            success {
              // publish html
              publishHTML target: [
                  allowMissing: false,
                  alwaysLinkToLastBuild: false,
                  keepAll: true,
                  reportDir: 'coverage',
                  reportFiles: 'index.html',
                  reportName: 'RCov Report'
                ]
            }
          }
        }
      }
      post {
        always {
          echo "Send notifications for result: ${currentBuild.result}"
        }
      }
    }

When I ran my pipelint.sh script on this pipeline it reported this error:

    $ pipelint.sh
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100    46  100    46    0     0   3831      0 --:--:-- --:--:-- --:--:--  4181
    Errors encountered validating Jenkinsfile:
    WorkflowScript: 30: Unknown stage section "step". Starting with version 0.5, steps in a stage must be in a steps block. @ line 30, column 5.
        stage ('Test') {
        ^

    WorkflowScript: 30: Nothing to execute within stage "Test" @ line 34, column 5.
        stage ('Test') {
        ^

Doh. I forgot the "s" on steps in the Test stage. Once I added the "s" and ran pipelint.sh again, I got an all clear.

    $ pipelint.sh
      % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                     Dload  Upload   Total   Spent    Left  Speed
    100    46  100    46    0     0   5610      0 --:--:-- --:--:-- --:--:--  5750
    Jenkinsfile successfully validated.

This didn’t mean there weren’t other errors, but for a two-second smoke test I’ll take it.
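Since the linter reports its verdict as plain text, the same smoke test can be turned into a pass/fail check. The sketch below assumes the success message is exactly the one shown above and treats any other response as a failure. `lint_ok` is a hypothetical helper name, not something Jenkins provides.

```shell
#!/bin/sh
# lint_ok: read a linter response on stdin, echo it back, and return
# 0 only if it contains the success message shown in this post.
lint_ok() {
  response=$(cat)
  printf '%s\n' "$response"
  case "$response" in
    *"Jenkinsfile successfully validated."*) return 0 ;;
    *) return 1 ;;
  esac
}

# Demonstration with the two kinds of response seen above (no Jenkins needed).
if printf 'Jenkinsfile successfully validated.\n' | lint_ok >/dev/null; then
  echo "pass"
fi
if ! printf 'Errors encountered validating Jenkinsfile:\n' | lint_ok >/dev/null; then
  echo "fail"
fi
```

In pipelint.sh, the output of the final curl call could be piped into `lint_ok`, so that the script's exit status tells a Git hook or a wrapper script whether the Jenkinsfile passed.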

Replay

I love being able to use source control to track changes to my Pipelines right alongside the rest of the code in a project. There are also times, when prototyping or debugging, that I need to iterate quickly on a series of possible Pipeline changes. The Replay feature lets me do that and see the results without committing those changes to source control.

When I wanted to take the previous Pipeline from agent any to using Docker via the docker { ... } directive, I used the Replay feature to test it out:

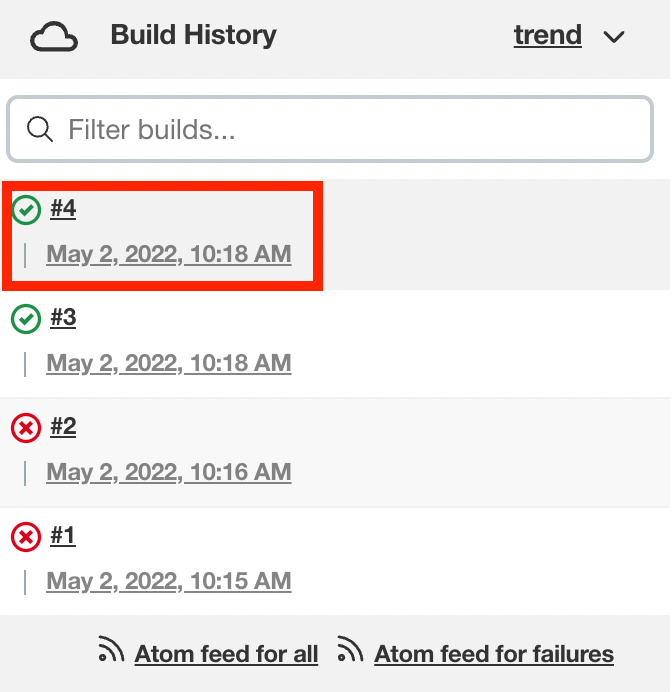

Selected the previously completed run in the build history

![Previous Pipeline Run]()

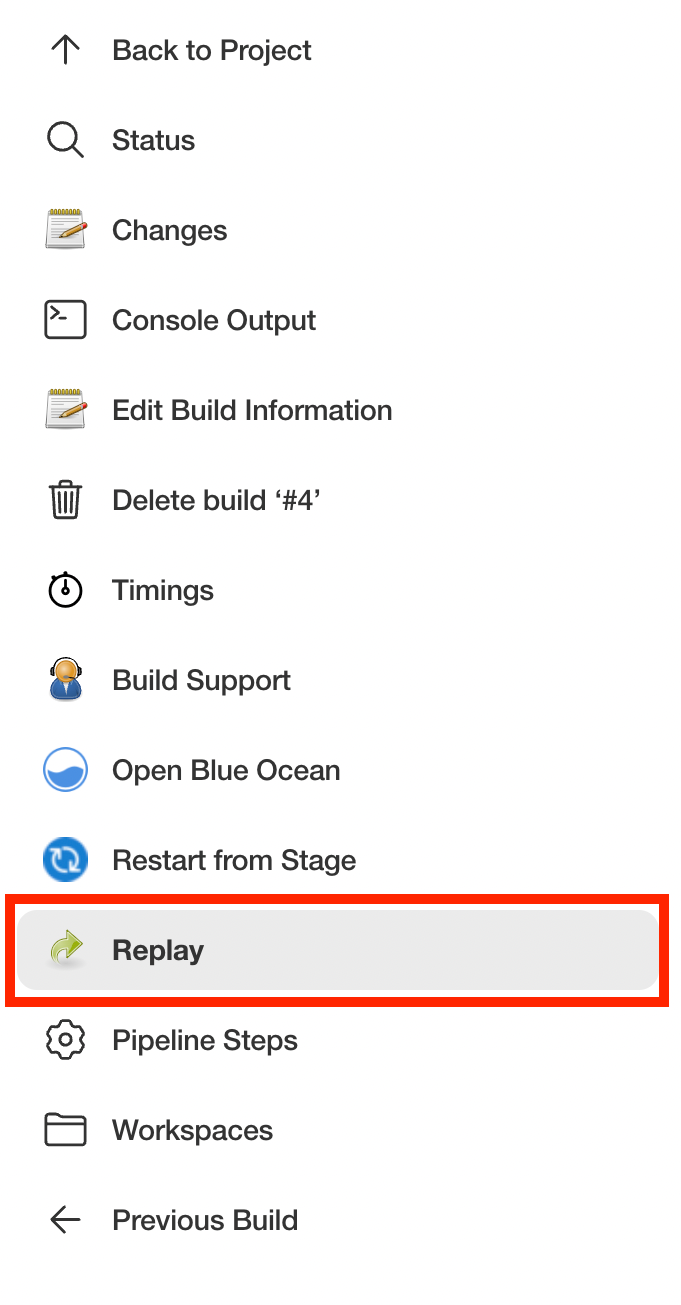

Clicked "Replay" in the left menu

![Replay Left-menu Button]()

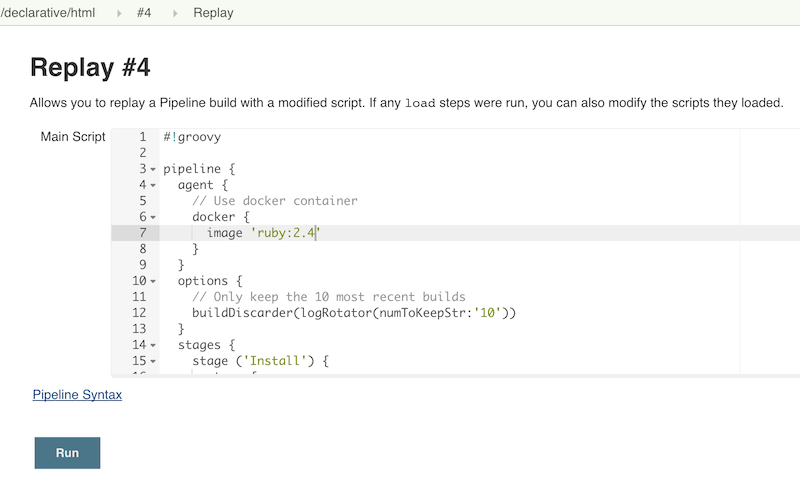

Made modifications and click "Run". In this example, I replaced

anywith thedocker { ... }directive.![Replay Left-menu Button]()

Checked the results of changes looked good.

Once I worked any bugs out of my Pipeline,

I used Replay to view the Pipeline for the last run and copy it back to myJenkinsfile and create a commit for that change.

Conclusion

This is far from a complete list of the tools out there for working with Pipeline. There are many more and the number is growing. For example, one tool I just recently heard about and haven’t had a chance to delve into is the Pipeline Unit Testing Framework, which promises the ability to test Pipelines before running them. It’s been a fun year and I can’t wait to see what the next year holds for Pipeline.