Hello! After an eventful community bonding period, we finally entered the coding phase. This blog post summarizes the work done up to the midterm of the coding phase, i.e. week 6. If any of the topics here need a more detailed explanation, I will write a separate blog post. These blog posts will not follow a strict format, but they will cover all of the user stories and features implemented.

Project Summary

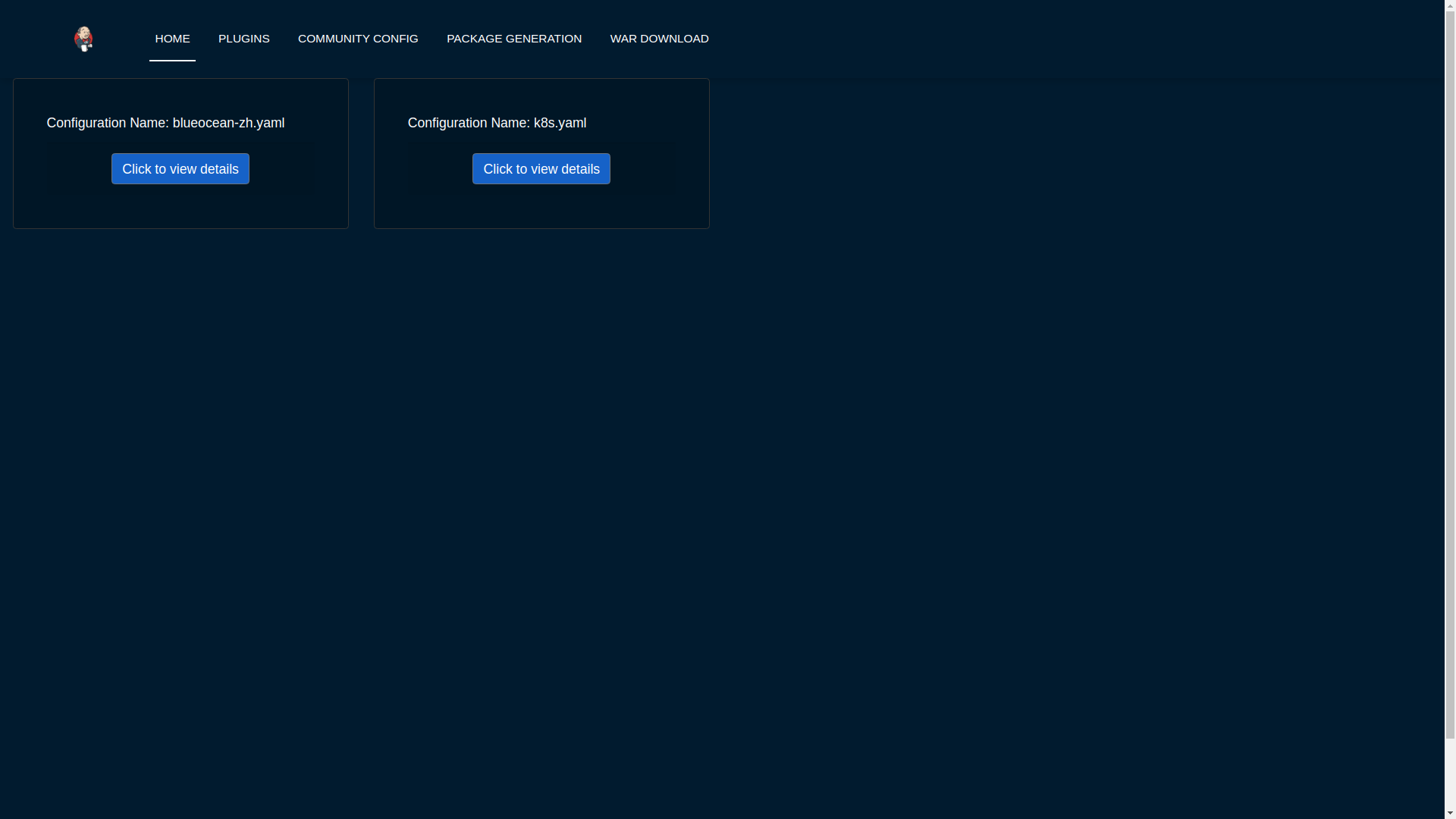

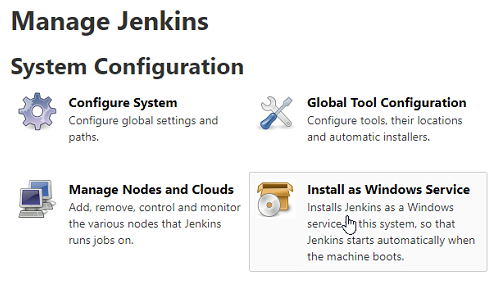

The main idea behind the project is to build a customizable Jenkins distribution service that can be used to build tailor-made Jenkins distributions. The service will provide users with a simple interface to select the configurations they want to build the instance with, e.g. plugins, authorization matrices, etc. Furthermore, it will include a section for sharing community-created distros, so that users can find and download ready-made Jenkins WAR/configuration files to use out of the box.

Quick review

| Metric | Count |
| --- | --- |
| Pull requests opened | 38 |
| GitHub issues completed | 36 |