Congratulations to all GSoC students who have made it through the first half of the GSoC 2021 coding phase!

This year, the Jenkins project has been participating in GSoC as part of the Continuous Delivery Foundation’s GSoC org. To celebrate our GSoC students and the fantastic work they have been doing, the CDF is hosting an online meetup where students will present their work. Students will be showcasing what they have learned and accomplished thus far in GSoC, demoing their work, and discussing their goals and plans for the second coding phase.

The CDF Google Summer of Code Midterm Demos will be held online on July 20th, 13:00 UTC - 15:00 UTC.

Sign up here: Meetup Event

Speakers

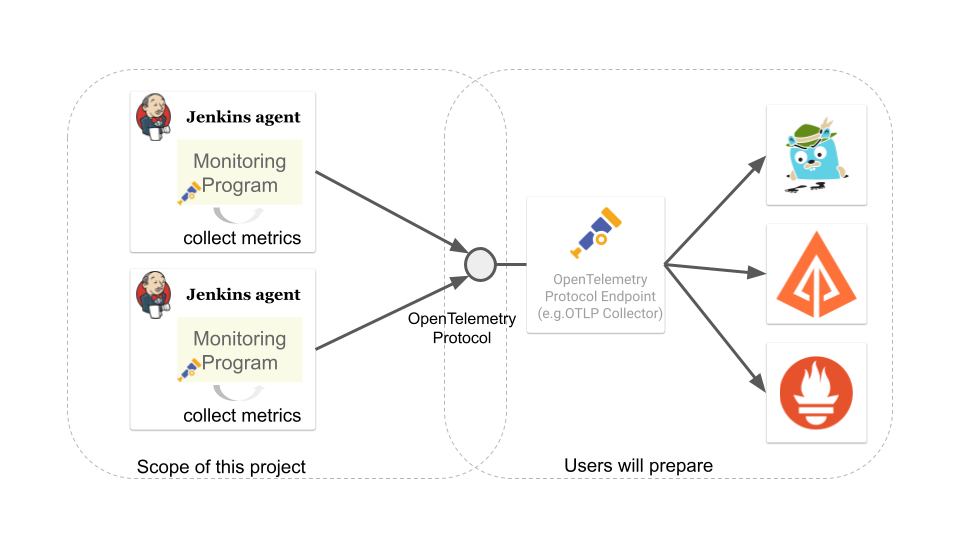

Akihiro Kiuchi - Jenkins Remoting Monitoring

Akihiro is a student in the Department of Information and Communication Engineering at the University of Tokyo. He is improving the monitoring experience of Jenkins Remoting during Google Summer of Code 2021.

Affiliation: The University of Tokyo and Jenkins project

GitHub: Aki-7

Title: Jenkins Remoting Monitoring with OpenTelemetry

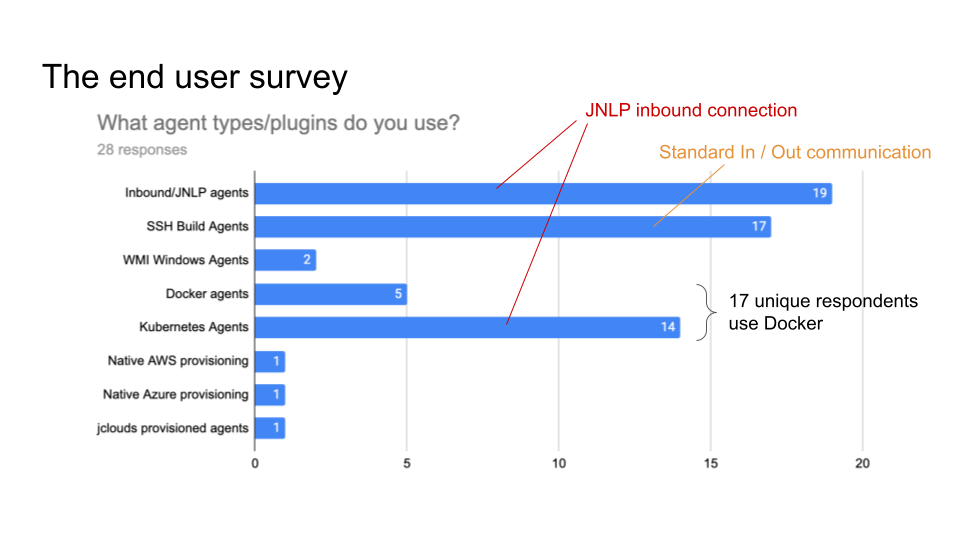

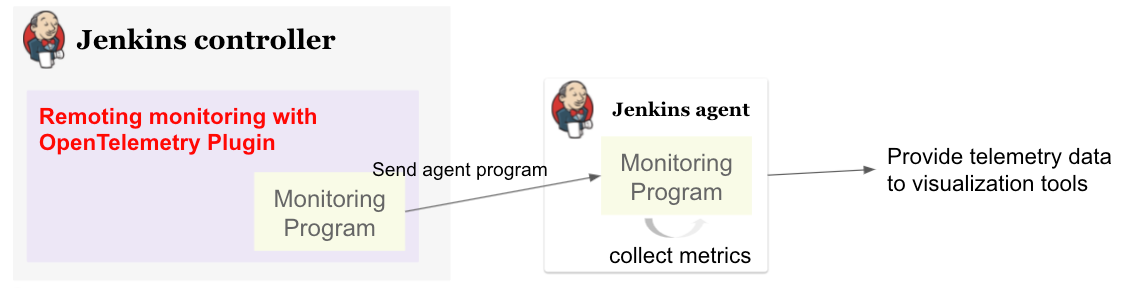

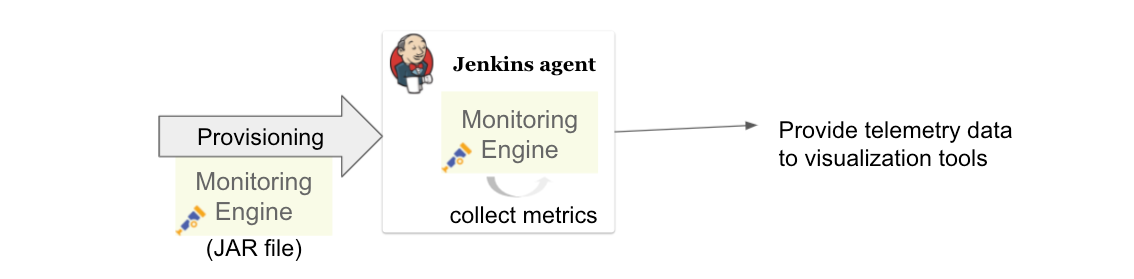

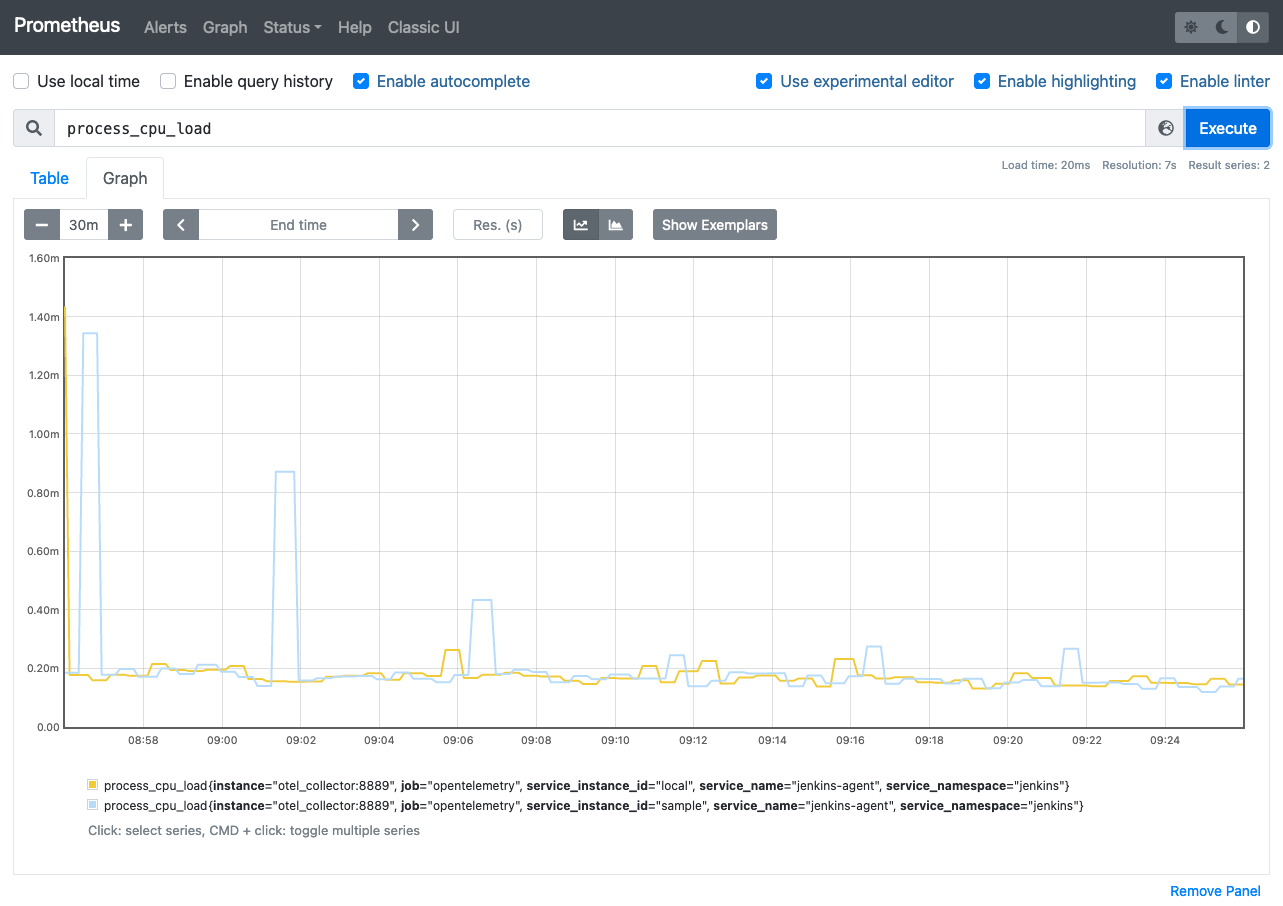

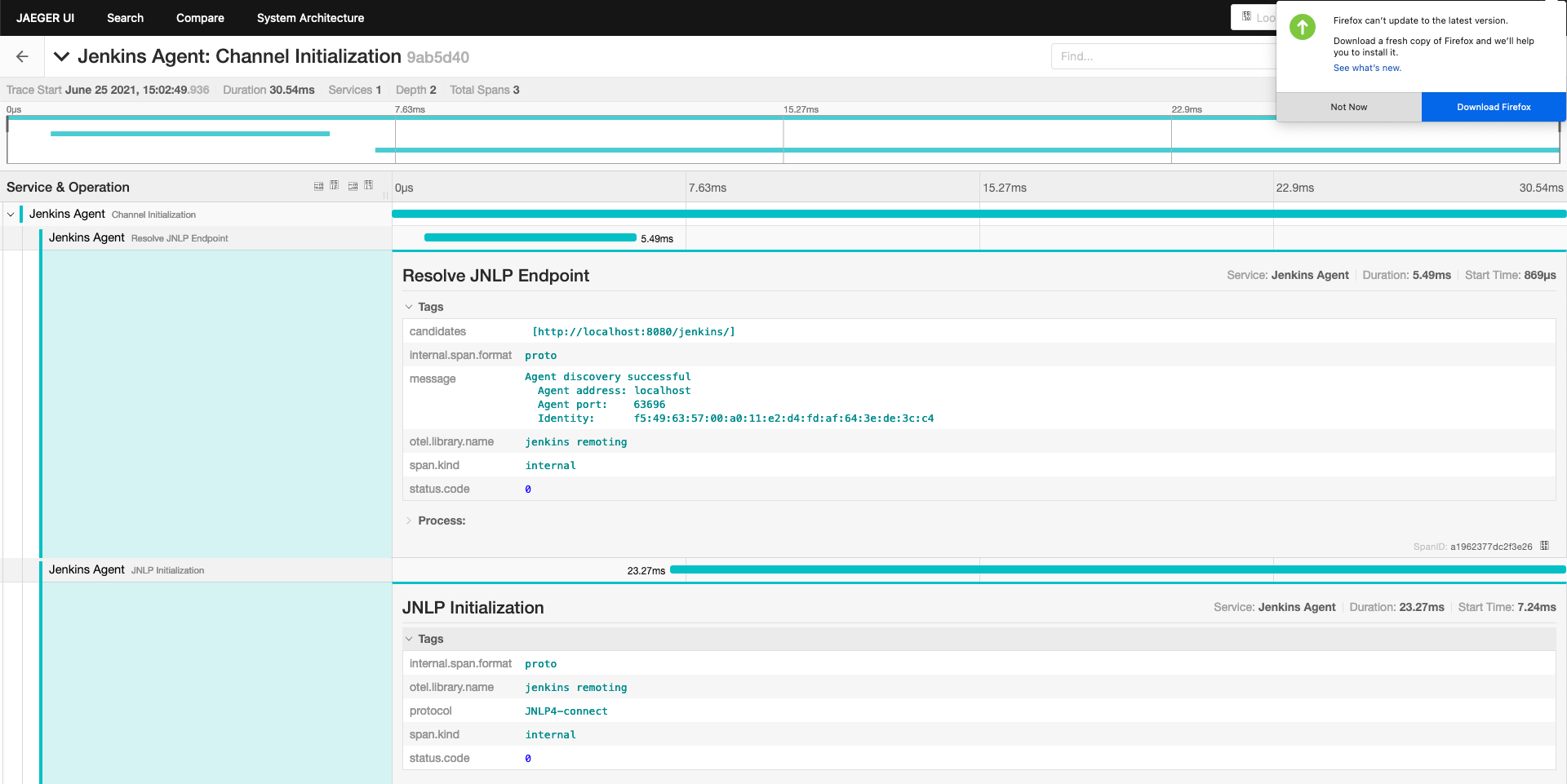

In this talk, he will discuss the problems in maintaining Jenkins agents and how to support Jenkins admins in troubleshooting them. As one of the solutions, he will introduce the new Remoting monitoring with OpenTelemetry plugin, which collects Jenkins Remoting monitoring and troubleshooting data using OpenTelemetry. He will also demonstrate what kinds of data the plugin collects and how that data can be visualized using available open-source monitoring tools.

Shruti Chaturvedi - CloudEvents Plugin for Jenkins

Shruti is an undergrad student of Computer Science at Kalamazoo College. She is developing a CloudEvents integration for Jenkins, allowing other CloudEvents-compliant CI/CD tools to communicate easily. Shruti is also the Founding Engineer of a California-based startup, MeetKlara, where she is building serverless solutions and advocating for developing CI/CD pipelines using open-source tools.

Affiliation: Kalamazoo College and Jenkins project

GitHub: ShrutiC-git

LinkedIn: Shruti Chaturvedi

Title: CloudEvents Plugin for Jenkins: Moving Towards Interoperability

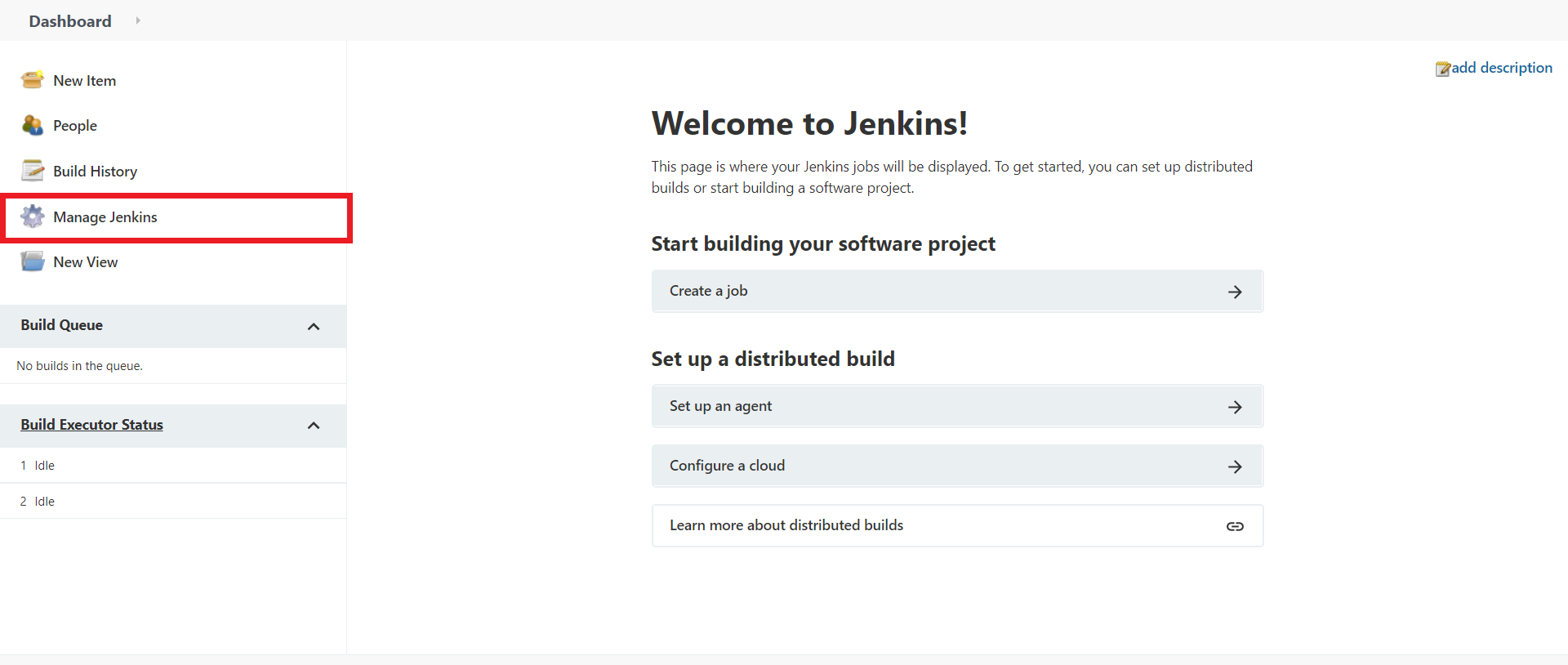

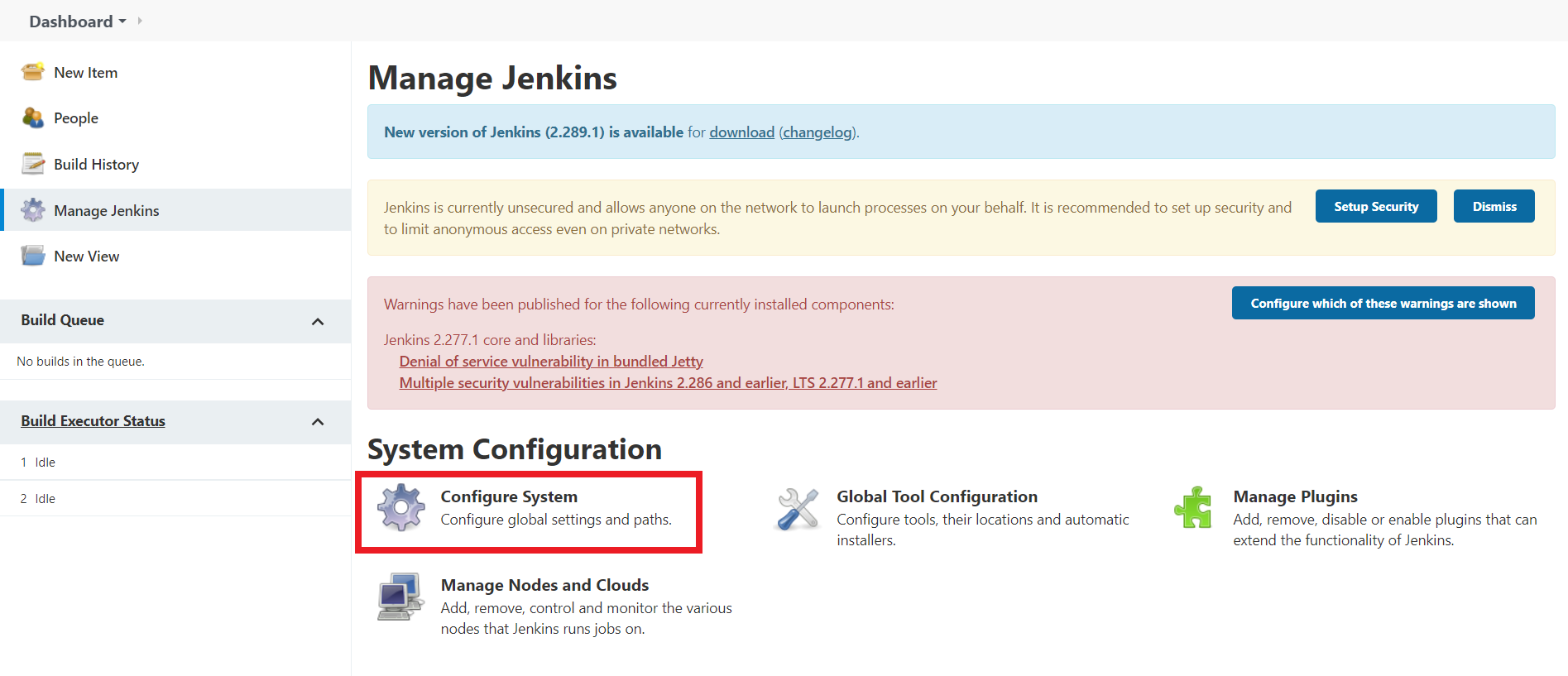

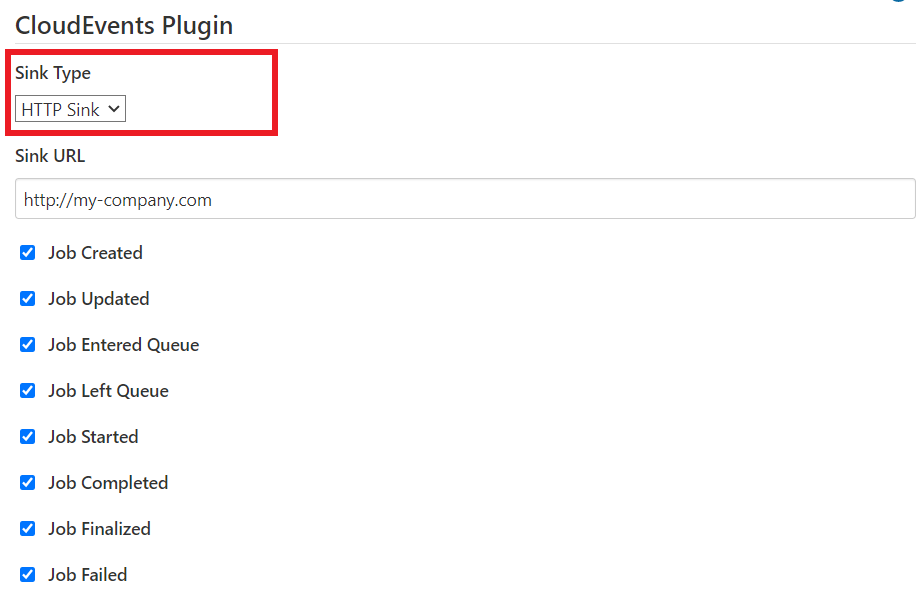

In this talk, we will look at interoperability as an essential element in building workloads across several services. We will also talk about how CloudEvents solves one of the biggest challenges in achieving interoperability between systems: the lack of normalization/standardization. Without a standard definition, services have to develop adapters specific to each system they want to interoperate with. That, however, is complex because services are always changing the way data/events are emitted. CloudEvents solves this problem by defining a standard format for events, which can be emitted/consumed agnostically, thereby achieving indirect interoperability. Shruti will demonstrate the workings of the CloudEvents Plugin for Jenkins; she will walk us through how Jenkins can be configured as a source and a sink, emitting and consuming CloudEvents-compliant events in a platform-independent manner.
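To make the idea of a standard event format concrete, here is a minimal sketch of a CloudEvents-style envelope built with the Python standard library only. It relies on the four required context attributes from the CloudEvents 1.0 specification (`specversion`, `id`, `source`, `type`). The helper names, the source URI, the event type, and the payload are hypothetical and are not taken from the plugin, so this should not be read as the events Jenkins actually emits.

```python
import json
import uuid
from datetime import datetime, timezone

# Context attributes that CloudEvents 1.0 marks as required.
REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def make_event(source, event_type, data):
    """Build a CloudEvents-style envelope as a plain dict (hypothetical helper)."""
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": event_type,
        # Optional attributes: RFC 3339 timestamp and payload content type.
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        "data": data,
    }


def is_valid_event(event):
    """Check that every required attribute is present as a non-empty string."""
    return all(
        isinstance(event.get(name), str) and event[name]
        for name in REQUIRED_ATTRIBUTES
    )


# Hypothetical source URI, event type, and payload, for illustration only.
event = make_event(
    "urn:example:jenkins",
    "com.example.job.completed",
    {"job": "demo", "result": "SUCCESS"},
)

# A "source" serializes the event; a "sink" parses it without needing a
# system-specific adapter, because the envelope fields are standardized.
wire = json.dumps(event)
received = json.loads(wire)
```

Because both sides agree on the envelope, a consumer only has to inspect `type` and `source` to decide how to handle the payload, whichever tool produced the event.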

Daniel Ko - try.spinnaker.io

Daniel is studying computer science at the University of Wisconsin - Madison. He is developing a public Spinnaker sandbox environment for Google Summer of Code 2021.

Affiliation: University of Wisconsin - Madison and Spinnaker project

GitHub: ko28

Title: try.spinnaker.io: Explore Spinnaker in a Sandbox Environment!

The talk will begin with a brief explanation of Spinnaker and the challenges that users face during the installation process. Daniel will then discuss the infrastructure of this project and how a public multi-tenant Spinnaker instance will be installed and managed. The talk will end with a demo of the site so far and the features implemented, including GitHub authentication, Kubernetes manifest deployment, the AWS Load Balancer Controller to expose deployments, a private ECR registry with all public images blocked, and automatic resource cleanup.

Aditya Srivastava - Conventional Commits Plugin for Jenkins

Aditya is a curiosity-driven individual striving to find ingenious solutions to real-world problems. He is an open-source enthusiast and a lifelong learner. Aditya is also the co-founder and maintainer of an open-source organization, Auto-DL, where he’s leading the development of a Deep Learning Platform as a Service application.

Title: Conventional Commits Plugin for Jenkins

In this talk, we’ll start with what conventional commits are and why they are needed. Then we’ll see what the Jenkins plugin "Conventional Commits" is and what goal it is trying to achieve. A demo will show how the plugin can be integrated into an existing workflow. Finally, we’ll talk about the next steps in plugin development, followed by a Q&A.

Harshit Chopra - Git credentials binding for sh, bat, and powershell

Harshit Chopra is a recent graduate and is currently working on a Jenkins project that brings authentication support for command-line git commands to Pipeline jobs and freestyle projects.

Affiliation: Punjab University and Jenkins project

GitHub: arpoch

LinkedIn: Harshit Chopra

Title: Git credentials binding for sh, bat, and powershell

In this talk, he will give an overview of the project, then explain the problems being faced and the workarounds currently used to tackle them. He will cover why authentication support is so important, why it is being built as a feature rather than as a plugin in itself, and what was accomplished during coding phase 1. He will then talk about the implementation of the feature and demonstrate git authentication support over the HTTP protocol.

Pulkit Sharma - Security Validator for Jenkins Kubernetes Operator

Pulkit is a student at the Indian Institute of Technology, BHU, Varanasi. He is working on a GSoC project under Jenkins where he aims to add a security validator to the Jenkins Kubernetes Operator.

Affiliation: Indian Institute of Technology, BHU and Jenkins project

GitHub: sharmapulkit04

Title: Security Validator for Jenkins Kubernetes Operator

In this talk, we will discuss why we need a security validator for the Jenkins Kubernetes Operator and how we are going to implement it via admission webhooks. We will look at the implementation of the validation webhook, the validation logic being used, and the tools we are using to achieve it. Pulkit will showcase his progress and discuss his plans for phase 2 and beyond.