While at Jenkins World, Kohsuke Kawaguchi presented two long-time Jenkins contributors, Andrew Bayer and Jesse Glick, with a "Small Matter of Programming" award. "Small Matter of Programming" is:

a phrase used to ironically indicate that a suggested feature or design change would in fact require a great deal of effort; it often implies that the person proposing the feature underestimates its cost.

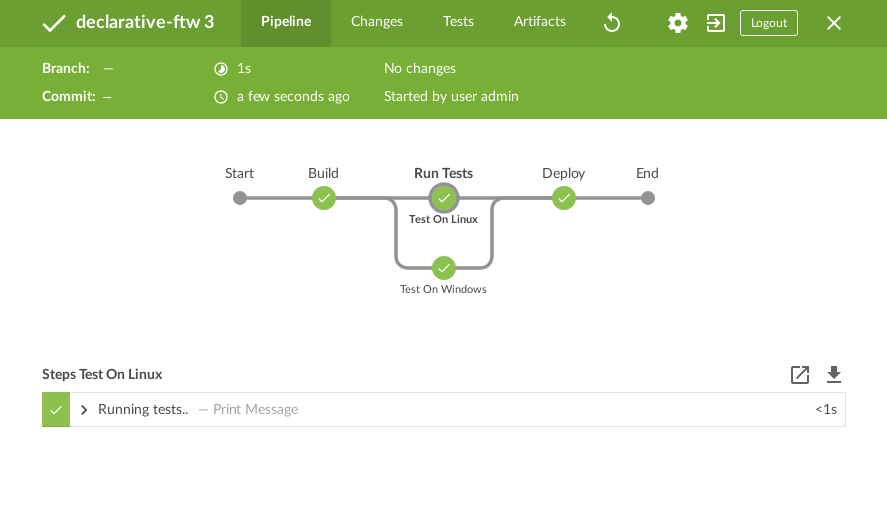

In this context the "Small Matter" relates to Jenkins Pipeline and a very simple snippet of Scripted Pipeline:

    [1, 2, 3].each { println it }

For a long time in Scripted Pipeline, this simply did not work as users would expect. Originally filed as JENKINS-26481 in 2015, it became one of the most voted-for and most watched tickets in the entire issue tracker until it was ultimately fixed earlier this year.

At least some closures are executed only once inside of Groovy CPS DSL scripts managed by the workflow plugin.

At a high level, what has been confusing for many users is that Scripted Pipeline looks like Groovy and quacks like Groovy, but it’s not exactly Groovy. Rather, there’s a custom Groovy interpreter (CPS) that executes the Scripted Pipeline in a manner which provides the durability and resumability that define Jenkins Pipeline.

Without diving into too much detail (refer to the pull requests linked to JENKINS-26481 for that), the code snippet above was particularly challenging to rectify inside the Pipeline execution layer. As one of the chief architects of Jenkins Pipeline, Jesse made a number of changes around the problem in 2016, but it wasn’t until early 2017 that Andrew, working on Declarative Pipeline, started to identify a number of areas for improvement in CPS and provided multiple patches and test cases.

As luck would have it, combining two of the sharpest minds in the Jenkins project resulted in the "Small Matter of Programming" being finished and released in May of this year with Pipeline: Groovy 2.33.

Please join me in congratulating and thanking Andrew and Jesse for their diligent, hard work smashing one of the most despised bugs in Jenkins history :).