November 2022

Welcome to the Jenkins Newsletter! This is a compilation of progress within the project, highlighted by Jenkins Special Interest Groups (SIGs) for the month of November.

Got inspiration? We would love to highlight your cool Jenkins innovations. Share your story and you could be in the next Jenkins newsletter. View previous editions of the Jenkins Newsletter here!

Happy reading!

Highlights:

Congratulations to the new officers and members of the governance board.

Pipeline Utility Steps Plugin was affected by CVE-2022-33980, which could lead to remote code execution in Jenkins.

New official "jenkinsci" npm account for the Jenkins project.

The ssh-agent Docker image now uses the correct volume for the agent workspace.

Jenkins 2.375.1 was released with more improvements to the user interface.

Jenkins.io is now using Algolia v3 for its search feature.

Jenkins is getting ready for Google Summer of Code 2023, with preparations to help new contributors write effective proposals.

Governance Update

Contributed by: Mark Waite

The 2022 Jenkins elections are complete. Thanks to Kevin Martens for the blog post announcing the results of the election and thanks to Damien Duportal for running the election process. We are glad to have received 65 registrations to vote in the election, although that is fewer than the 81 registered voters we had in 2021.

Elected officers and members of the Jenkins governance board will begin their service on December 3, 2022. Special thanks to the members of the governance board who have completed their two-year service:

Congratulations to the new members of the governance board.

Congratulations also to the newly elected officers:

Release Officer - Tim Jacomb (timja)

Events Officer - Alyssa Tong

Security Officer - Wadeck Follonier

Documentation Officer - Kevin Martens

Infrastructure Officer - Damien Duportal

Governance board meetings are held every two weeks. Meeting notes and recordings of the meetings are available on the Jenkins community site.

Security Update

Contributed by: Wadeck Follonier

One security advisory about plugins was published in November.

Vulnerabilities in third-party dependencies usually do not affect the final product, so it is worth mentioning when there is a true positive case. Pipeline Utility Steps Plugin was affected by CVE-2022-33980, a vulnerability in its Apache Commons Configuration dependency, which could lead to remote code execution in Jenkins.

Infrastructure Update

Contributed by: Damien Duportal

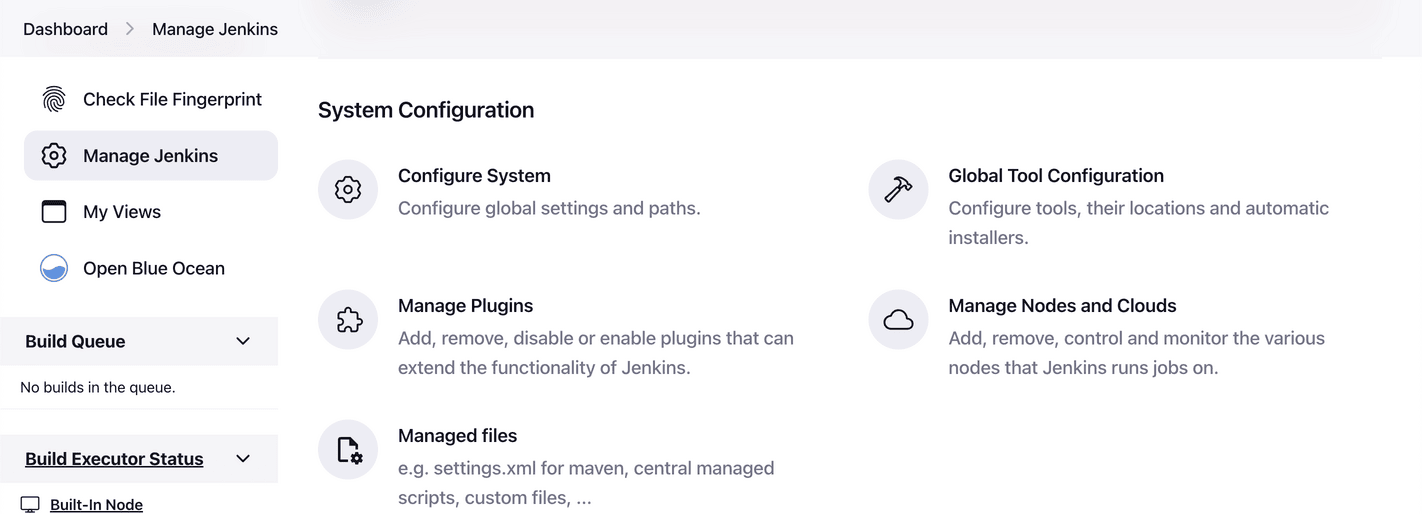

Upgraded our controllers to the latest LTS, 2.375.1.

New official "jenkinsci" npm account for the Jenkins project.

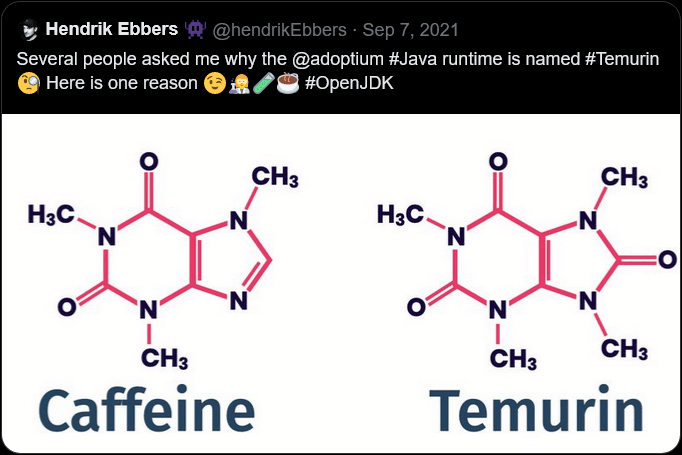

JDK17 for Windows is now provided to developers.

The https://meetings.jenkins-ci.org/ website (archive of board meeting minutes) has been recovered and is back online.

The deprecated "windows-slaves" plugin has been removed.

Azure networks are now managed as code, which eases management and prepares for future work such as IPv6 support and network performance improvements.

Platform Update

Contributed by: Bruno Verachten

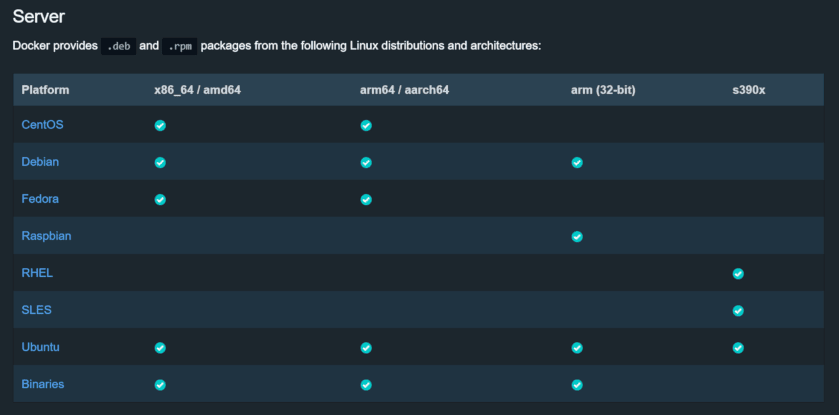

Changes to the Docker images for Jenkins:

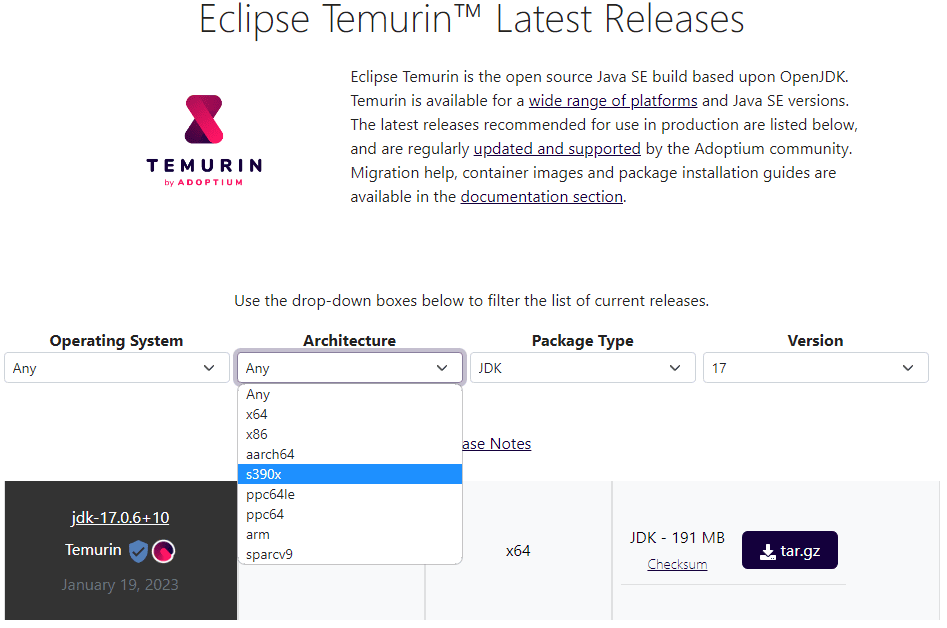

JDK17: added ARMv7 support.

Monthly base OS updates and autumn cleanup.

A large effort (not finished yet) to ensure the “latest” image is no longer overridden by older releases.

Automatic tracking of remoting and JDK versions has been implemented, so updates will arrive faster.

Lots of fixes, including previously missing packages such as curl, ssh, git, less, and patch.

The ssh-agent image now uses the correct volume for the agent workspace.
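The point above about keeping “latest” from being overridden by older releases comes down to one rule: only move “latest” forward. The sketch below illustrates that rule under stated assumptions. It is hypothetical: `parse_version` and `should_update_latest` are illustrative names, versions are assumed to be dot-separated integers such as `2.375.1`, and this is not the actual logic used in the Jenkins Docker repositories.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Hypothetical helper: turn '2.375.1' into (2, 375, 1).

    Assumes purely numeric, dot-separated versions, so comparison
    is numeric per component rather than lexicographic on strings.
    """
    return tuple(int(part) for part in version.split("."))


def should_update_latest(new_release: str, current_latest: str) -> bool:
    """Hypothetical check: move 'latest' only if the new release is newer.

    Rebuilding an older line (for example a patch to a previous LTS)
    must never replace the image currently tagged 'latest'.
    """
    return parse_version(new_release) > parse_version(current_latest)


if __name__ == "__main__":
    print(should_update_latest("2.375.1", "2.361.4"))  # newer release: move latest
    print(should_update_latest("2.361.4", "2.375.1"))  # older release: keep latest
```

Comparing tuples of integers rather than raw strings matters here: as strings, "2.99.1" sorts after "2.375.1", which would let an older release reclaim “latest”.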

New platforms:

Experiments with Windows 2022: working with Jenkins Infrastructure to build these images.

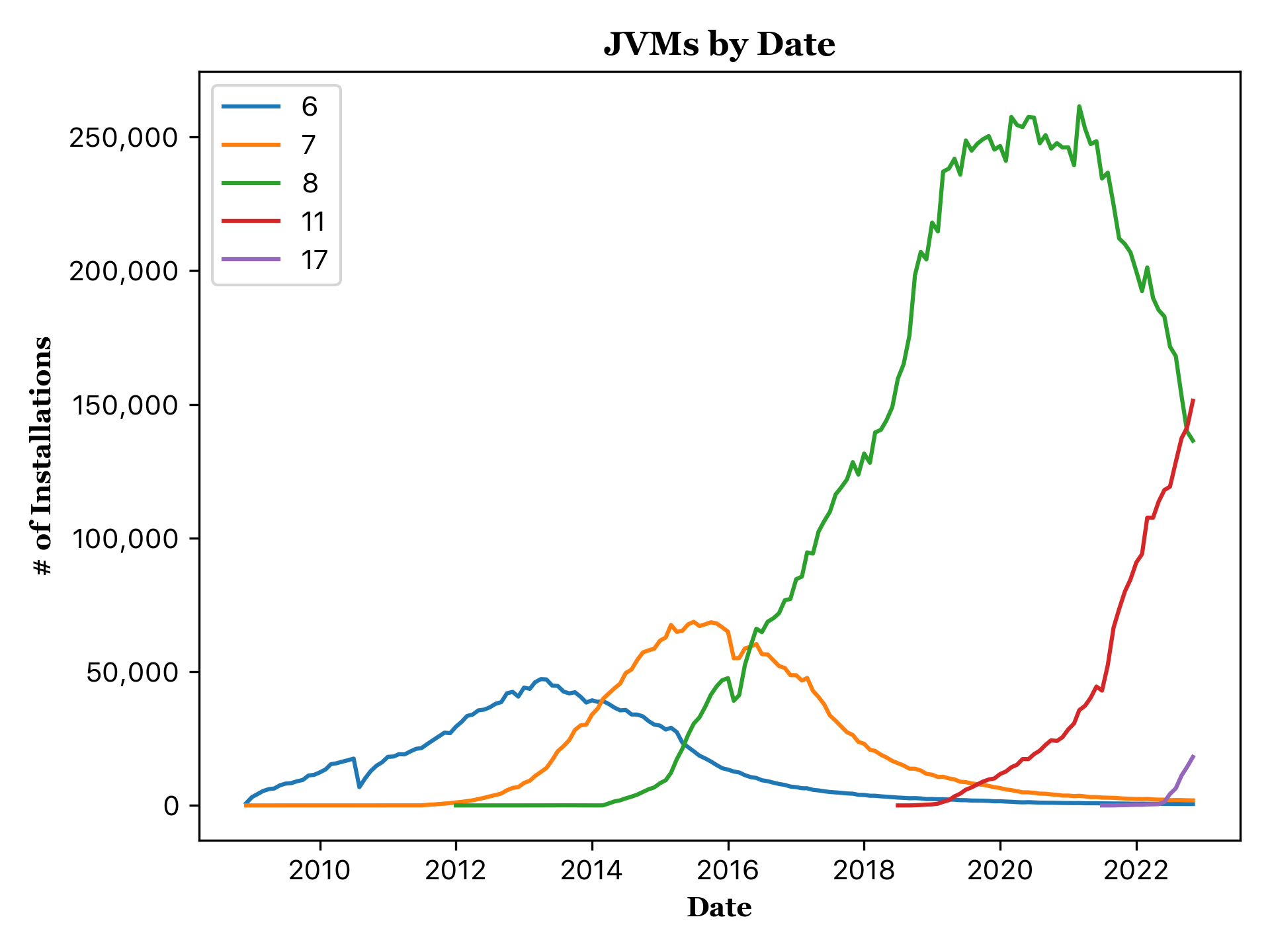

Experiments with JDK19: the transition from ANTLR 2 to ANTLR 4 is complete, and Jenkins core compiles.

User Experience Update

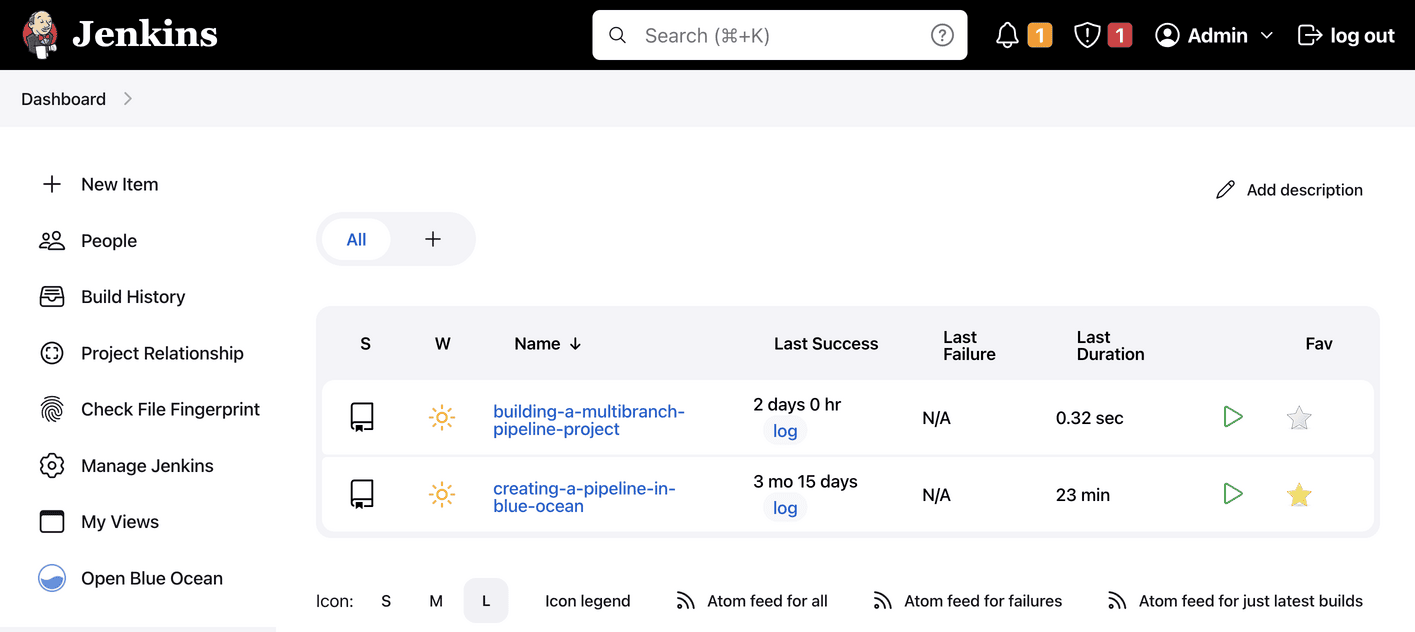

Contributed by: Mark Waite

Jenkins 2.375.1 was released on November 30, 2022 with more improvements to the user interface. Special thanks to Jan Faracik, Tim Jacomb, Alexander Brandes, Wadeck Follonier, Daniel Beck, and many others who were involved in the most recent improvements to the user experience.

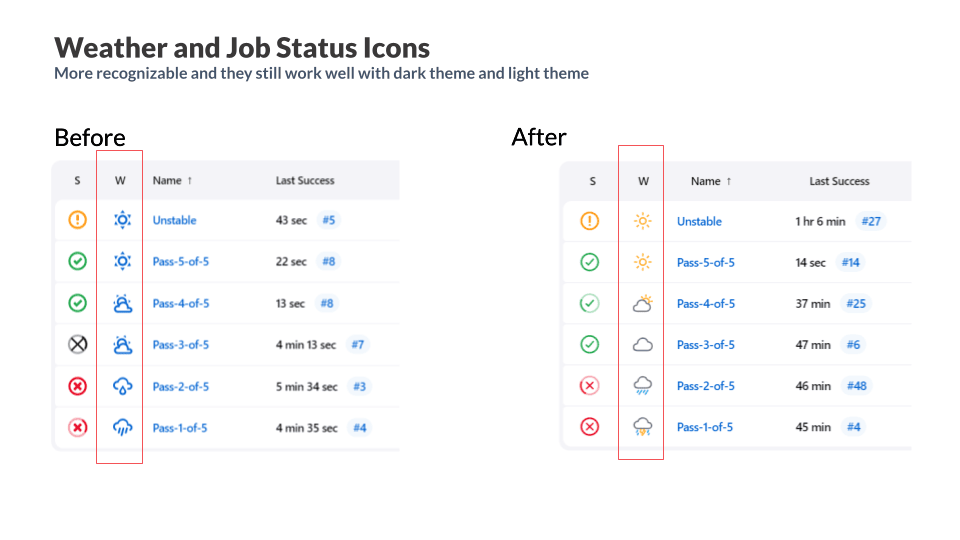

The weather icons have been updated to be more recognizable. They continue to work well in both light themes and dark themes.

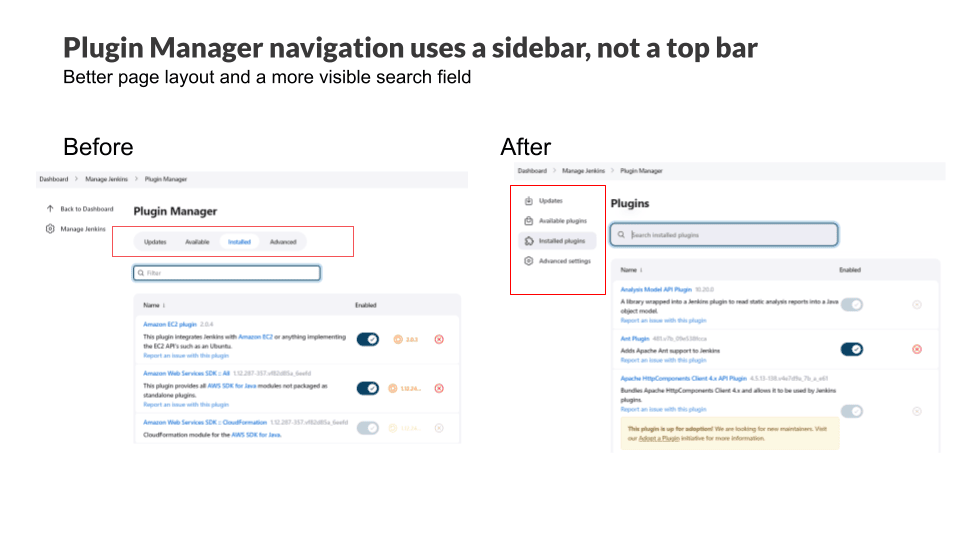

The plugin manager navigation has moved from the top of the page to the side panel. The search field is more visible.

The User Experience SIG is also pleased to note a valuable improvement in the Jenkins 2.380 weekly release. The tooltips that were previously provided by the unmaintained and long-outdated YahooUI JavaScript library are now provided by the Tippy.js JavaScript library. Special thanks to Jan Faracik for his work removing that use of the YahooUI JavaScript library.

Documentation Update

Contributed by: Kevin Martens

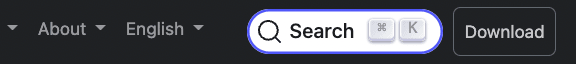

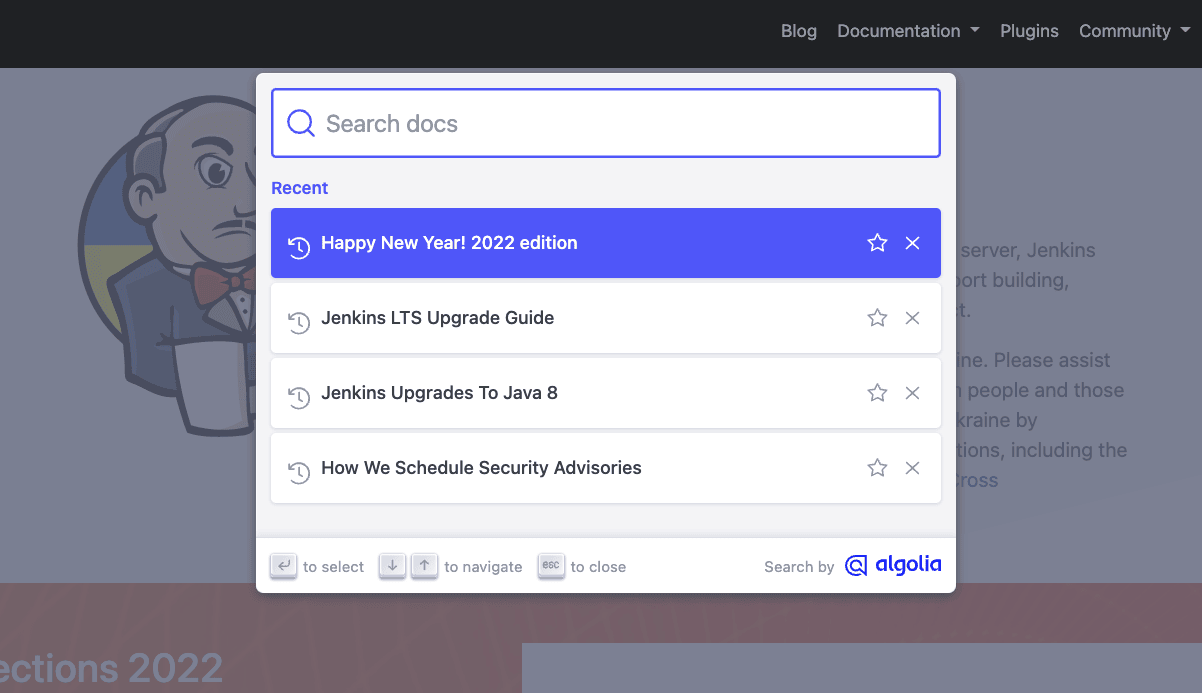

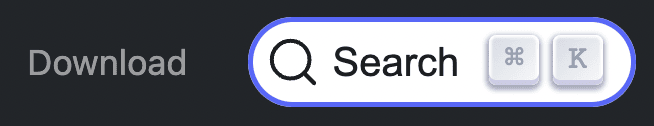

Jenkins.io is now using Algolia v3 for its search feature. This update has not only improved searching on the Jenkins site, but also provided a new search UI, which provides helpful suggestions. Massive thanks to Gavin Mogan for working on this and improving the Jenkins.io search.

Algolia has graciously upgraded our search from their legacy v2 to the super pretty and useful v3 APIs. This includes a new fully accessible popup. I just love being able to go to jenkins.io and hit Ctrl+K to search.

said Gavin Mogan, current Jenkins governance board member and maintainer of the Jenkins plugin site and plugin site API.

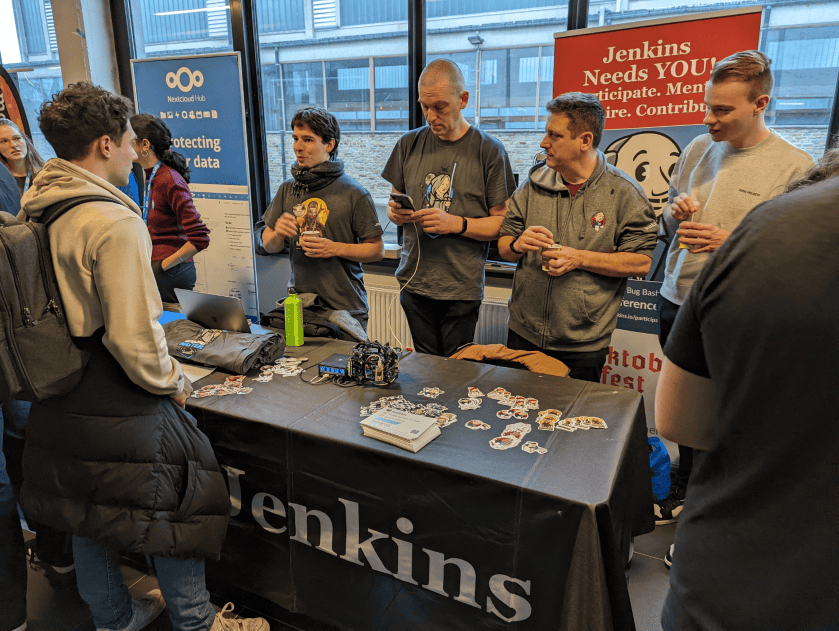

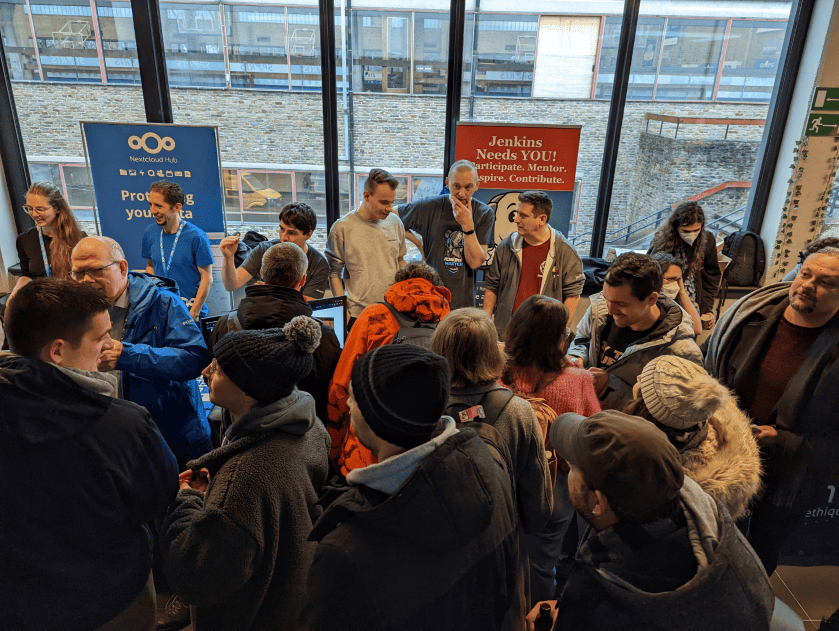

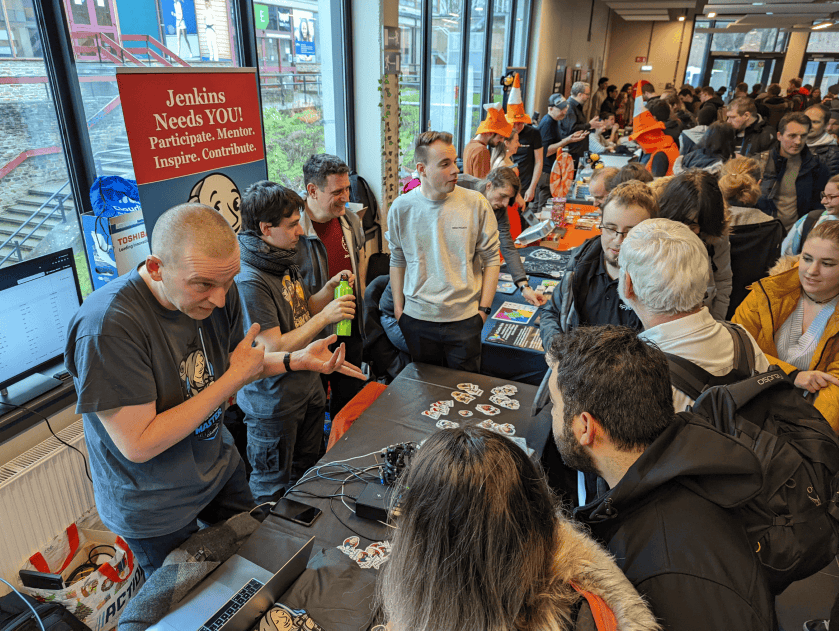

Advocacy & Outreach Update

Contributed by: Alyssa Tong

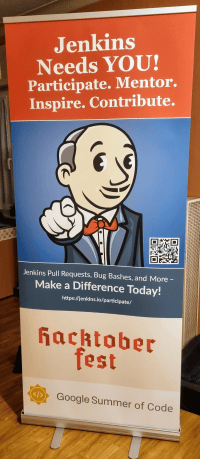

Jenkins gets ready for Google Summer of Code 2023!

Google recently announced the GSoC 2023 program timeline, and the Advocacy & Outreach SIG has responded! We’ve published the “GSoC early preparations for applicants - steps to effective submission” post to help future contributors with the process. On December 20, 2022 at 4PM UTC, a webinar will walk through this process. We would like this to be an interactive webinar, so bring your questions. See the event calendar entry “GSoC 2023 - Contributor webinar: How to get ready” for login details.

We are still in great need of project idea proposals and mentors.

GSoC project ideas are coding projects that potential GSoC contributors can accomplish in 10-22 weeks.

The coding projects can be new features, plugins, test frameworks, infrastructure, etc. Anyone can submit a project idea.

Mentoring takes about 5 to 8 hours of work per week (more at the start, less at the end).

Mentors provide guidance, coaching, and sometimes a bit of cheerleading. They review GSoC contributor proposals, pull requests, and contributor presentations during the evaluation phase.

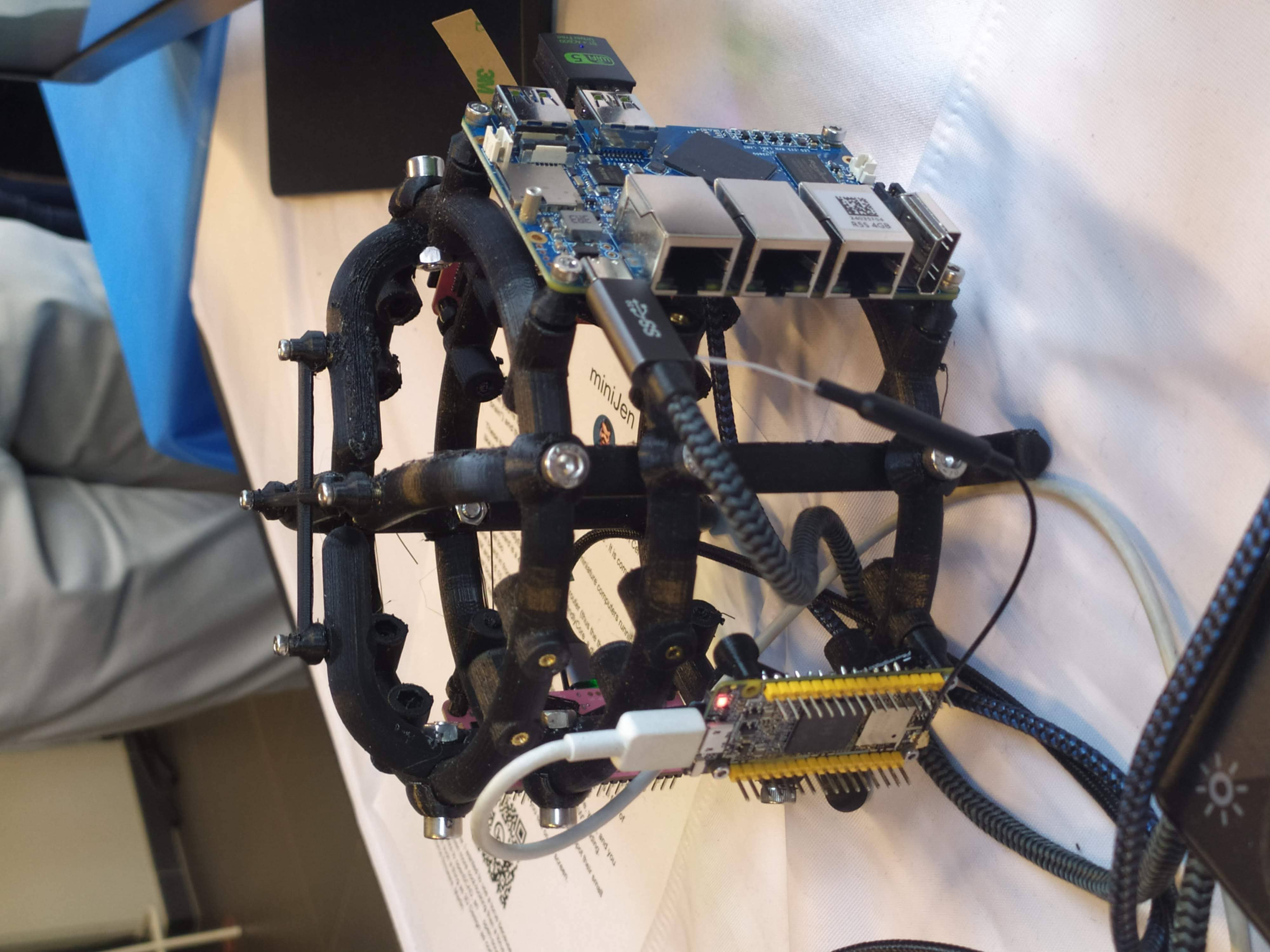

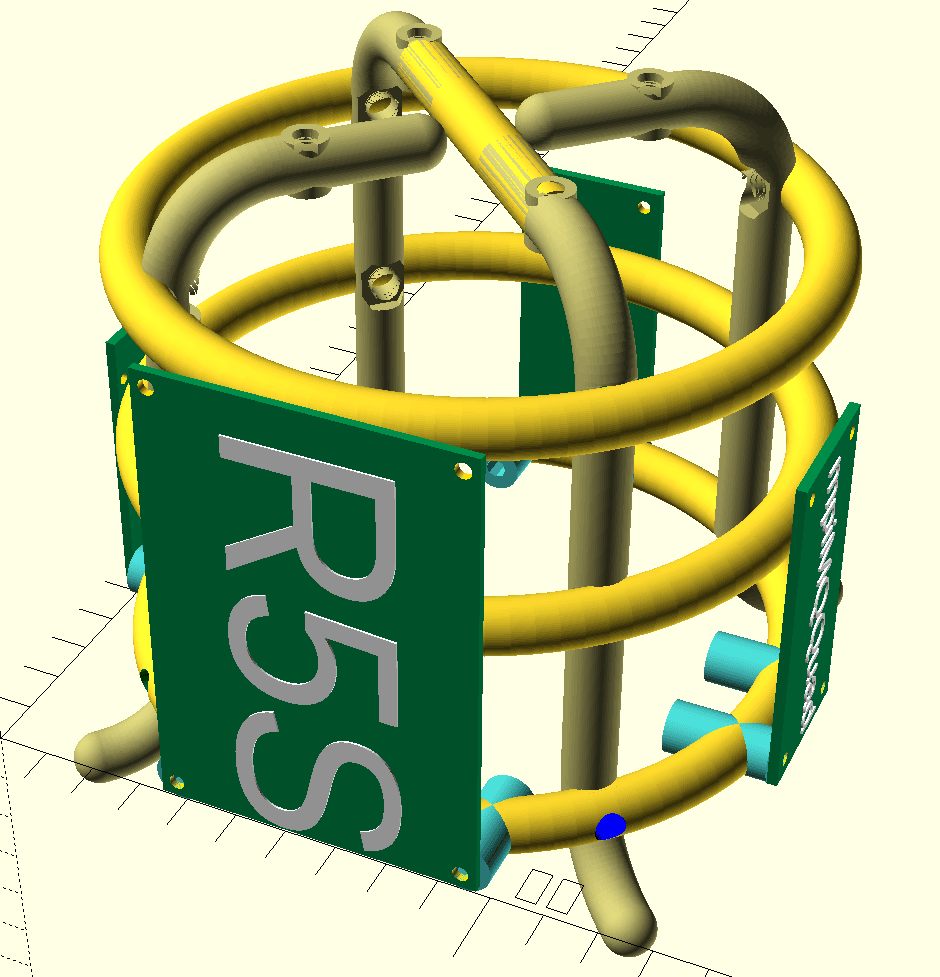
